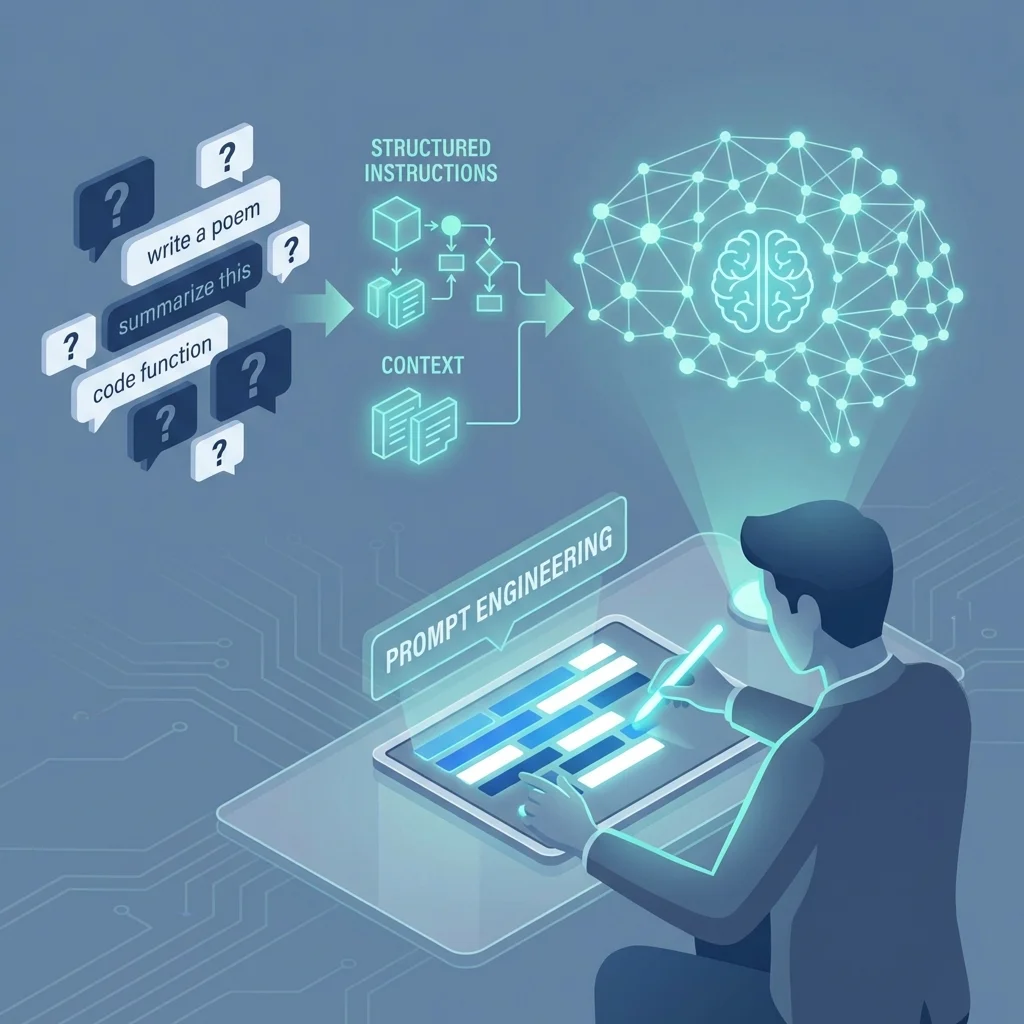

Prompt Engineering in 2026: Is It Still Relevant?

"Just talk to it naturally—prompt engineering is dead."

I've heard this claim dozens of times since GPT-4 arrived. And to be fair, AI has gotten remarkably good at understanding vague instructions.

But after two years of using advanced AI models daily, I can tell you: prompt engineering isn't dead. It's evolved.

What's Changed Since 2024

AI Got Smarter About Context

Modern models like GPT-5, Claude 4.5, and Gemini 3 are much better at:

- Inferring intent from vague prompts

- Maintaining context across long conversations

- Asking clarifying questions when needed

- Handling ambiguity gracefully

Simple Tasks Require Less Engineering

For basic queries like "Summarize this article" or "Write a birthday message," you barely need prompt engineering. The AI just gets it.

But Complex Tasks Still Benefit

When you need:

- Specific output formats

- Consistent style across generations

- Complex reasoning chains

- Integration with workflows

...prompting skills still make a significant difference.

What Prompt Engineering Looks Like Now

1. System Instructions (Not Individual Prompts)

The shift is from crafting individual prompts to setting up persistent instructions:

Old approach (pasted at the top of every prompt):

"You are a helpful assistant who writes formally and includes bullet points in every response..."

New approach: Configure once in your AI tool's settings, then converse naturally.

ChatGPT's "Custom Instructions," Claude's "Projects," and API system prompts handle this.
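At the API level, "configure once" might look something like the sketch below: the instructions live in one constant and get prepended to every conversation. The `build_messages` helper and the role/content message shape are my own illustrative assumptions. Some APIs take the system prompt as a separate parameter rather than as a message, so check your provider's docs.

```python
# Persistent instructions, written once instead of pasted into every prompt.
SYSTEM_INSTRUCTIONS = (
    "You are a helpful assistant who writes formally "
    "and includes bullet points in every response."
)


def build_messages(user_text, history=None):
    """Prepend the persistent instructions to a conversation (hypothetical helper)."""
    messages = [{"role": "system", "content": SYSTEM_INSTRUCTIONS}]
    messages.extend(history or [])  # earlier turns, if any
    messages.append({"role": "user", "content": user_text})
    return messages


# The user message can now be short and natural.
messages = build_messages("Summarize this article for me.")
```

The list you get back is what you would hand to whichever chat API you use.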

2. Context Management

With longer context windows, the skill is now:

- What context to include

- How to structure reference material

- When to start fresh vs. continue

Example: Instead of cramming everything into one prompt, use Claude's Projects to upload reference docs once.
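If you work through an API instead of a Projects-style feature, one way to structure reference material is to wrap each document in a labeled block and put the question last. This is a minimal sketch, not a standard: the `build_context` name, the tag format, and the sample documents are all placeholders I made up for illustration.

```python
def build_context(documents, question):
    """Wrap each reference document in a labeled block, then ask the question."""
    parts = []
    for name, text in documents.items():
        # The tag format is an arbitrary choice; any clear delimiter works.
        parts.append(f'<document name="{name}">\n{text.strip()}\n</document>')
    parts.append(f"Question: {question}")
    return "\n\n".join(parts)


# Placeholder documents, invented for this example.
prompt = build_context(
    {
        "style_guide": "Use plain language. Keep sentences short.",
        "meeting_notes": "The launch review moved to Thursday.",
    },
    "Draft a two-sentence update that follows the style guide.",
)
print(prompt)
```

Labeling each document makes it easy to point at one by name later ("follow the style guide") instead of pasting it again.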

3. Chain-of-Thought Triggers

Modern models have built-in reasoning, but you can still guide it by spelling out the steps you want:

"Let's approach this systematically. First, analyze the problem. Then, consider three possible solutions. Finally, recommend the best option with reasoning."

This structure yields better results than:

"What should I do about this problem?"

4. Output Format Specification

Still valuable for consistent, structured output:

"Respond in JSON format with keys: title, summary, recommendations (array), confidence_score"

Especially important for automation and API workflows.
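In an automated workflow, I would not assume the model followed the format. A small check like the sketch below catches malformed replies before they reach downstream code. The key names come from the prompt above; the `parse_response` function and the sample reply are assumptions for illustration.

```python
import json

# Keys requested in the format instruction above.
REQUIRED_KEYS = {"title", "summary", "recommendations", "confidence_score"}


def parse_response(raw):
    """Parse a model reply and confirm it has the requested shape."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    if not isinstance(data["recommendations"], list):
        raise ValueError("recommendations must be an array")
    return data


# Stand-in for a model reply; a real one would come from your API call.
sample = (
    '{"title": "T", "summary": "S", '
    '"recommendations": ["a", "b"], "confidence_score": 0.8}'
)
result = parse_response(sample)
```

When the check fails, a common follow-up is to send the error message back to the model and ask it to try again.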

Skills That Still Matter

1. Specificity When Needed

Vague prompts work for casual use. But for production content:

❌ "Write about climate change"

✅ "Write a 500-word article about urban carbon offset programs for a newsletter targeting city planners. Include 2-3 specific examples and a call-to-action."

2. Role and Persona Assignment

Still effective for specialized tasks:

"You are a senior tax accountant with expertise in small business deductions. Review this expense list and identify legitimate deductions, explaining the relevant tax code for each."

3. Few-Shot Examples

When you need a specific style or format:

"Here's an example of the tone I want:

Input: 'The meeting is postponed'

Output: 'Quick update: we're shifting the meeting to next week to give everyone time to prepare. New invite coming shortly!'

Now write a similar message for: 'The project deadline is extended'"
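If you reuse the same examples often, it can help to build the prompt from data rather than retyping it. Here is a minimal sketch; `few_shot_prompt` is a hypothetical helper, and the layout simply mirrors the example above.

```python
def few_shot_prompt(examples, new_input):
    """Format (input, output) pairs, followed by the new input to rewrite."""
    lines = ["Here's an example of the tone I want:", ""]
    for source, rewritten in examples:
        lines.append(f"Input: '{source}'")
        lines.append(f"Output: '{rewritten}'")
        lines.append("")  # blank line between examples
    lines.append(f"Now write a similar message for: '{new_input}'")
    return "\n".join(lines)


examples = [
    (
        "The meeting is postponed",
        "Quick update: we're shifting the meeting to next week to give "
        "everyone time to prepare. New invite coming shortly!",
    ),
]
prompt = few_shot_prompt(examples, "The project deadline is extended")
print(prompt)
```

Adding a second or third pair to `examples` is usually the easiest way to tighten the style further.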

4. Iterative Refinement

The best results often come from conversation:

- Initial prompt

- "Good, but make it more concise"

- "Now add specific metrics"

- "Adjust the tone to be less formal"

This iterative approach beats trying to write a perfect prompt upfront.
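In code, iterating means keeping the whole conversation and appending each follow-up, so the model sees its earlier drafts. The sketch below assumes a `send` callable that takes the message history and returns the reply text. `fake_send` is a stand-in so the example runs without any API.

```python
def refine(send, initial_prompt, follow_ups):
    """Run an initial prompt, then apply each follow-up in the same conversation."""
    history = [{"role": "user", "content": initial_prompt}]
    history.append({"role": "assistant", "content": send(history)})
    for note in follow_ups:
        history.append({"role": "user", "content": note})
        history.append({"role": "assistant", "content": send(history)})
    return history


def fake_send(history):
    """Stand-in for a real model call: reports how many messages it was shown."""
    return f"draft after {len(history)} message(s)"


history = refine(
    fake_send,
    "Write a status update for the team.",
    ["Good, but make it more concise", "Now add specific metrics"],
)
print(history[-1]["content"])  # prints "draft after 5 message(s)"
```

Swapping `fake_send` for a function that calls your provider is the only change needed to try this for real.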

What's Obsolete

1. Jailbreak Attempts

Modern models have robust safety measures. Don't waste time trying to circumvent them.

2. Overly Complex Prompt Templates

Long, elaborate templates with "magic words" are less necessary. Models understand natural language better.

3. Prompt Copying Without Understanding

Copying prompts from the internet worked when models were finicky. Now, understanding why prompts work matters more than memorizing templates.

4. Single-Shot Perfection

The goal isn't one perfect prompt—it's an effective collaboration over multiple exchanges.

The New Skills to Develop

1. Context Architecture

How you structure information across:

- System prompts

- Uploaded documents

- Conversation history

- Tool configurations

2. AI Tool Selection

Knowing when to use:

- ChatGPT vs. Claude vs. Gemini

- Chat interface vs. API

- Standard vs. reasoning models

3. Verification and Iteration

Developing intuition for:

- When to trust AI output

- What to fact-check

- How to iterate effectively

4. Workflow Integration

Connecting AI to your actual work:

- API automation

- Tool chaining

- Output formatting for downstream use

A Modern Prompting Framework

For complex tasks, I use this structure:

1. Role (When Helpful)

"You are an experienced data analyst..."

2. Context

"I'm working on a quarterly report for stakeholders. Here's the raw data: [data]"

3. Task (Specific)

"Identify the top 3 trends and explain their business implications."

4. Format (When Needed)

"Format as a brief executive summary with bullet points."

5. Constraints (When Needed)

"Keep it under 300 words. Avoid technical jargon."

Not every task needs all elements. Use what's relevant.
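Here is one way the framework could be turned into a small helper, where only the task is required and the other elements are skipped when left out. The `build_prompt` function and its parameter names are my own illustration, not a standard API.

```python
def build_prompt(task, role=None, context=None, output_format=None, constraints=None):
    """Assemble a prompt from the five elements, skipping any that are omitted."""
    # Order follows the framework: role, context, task, format, constraints.
    sections = [role, context, task, output_format, constraints]
    return "\n\n".join(s.strip() for s in sections if s)


prompt = build_prompt(
    role="You are an experienced data analyst.",
    context="I'm working on a quarterly report for stakeholders. "
            "Here's the raw data: [data]",
    task="Identify the top 3 trends and explain their business implications.",
    output_format="Format as a brief executive summary with bullet points.",
    constraints="Keep it under 300 words. Avoid technical jargon.",
)

# A simple task needs only the task itself.
simple = build_prompt("Summarize this article.")
```

Keeping the elements separate also makes it easy to change one (say, the constraints) without rewriting the rest.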

The Bottom Line

Prompt engineering in 2026 is less about memorizing tricks and more about:

- Understanding how AI models process information

- Communicating clearly and specifically when needed

- Iterating through conversation rather than crafting one perfect prompt

- Architecting context and tool configurations thoughtfully

The skill has evolved from "prompt writing" to "AI collaboration."

Is prompt engineering "dead"? No. But it's matured into something more subtle and integrated into how we work with AI systems.

The people who get the best results aren't following templates—they're thinking clearly about what they need and communicating it effectively.

That skill never goes out of style.
