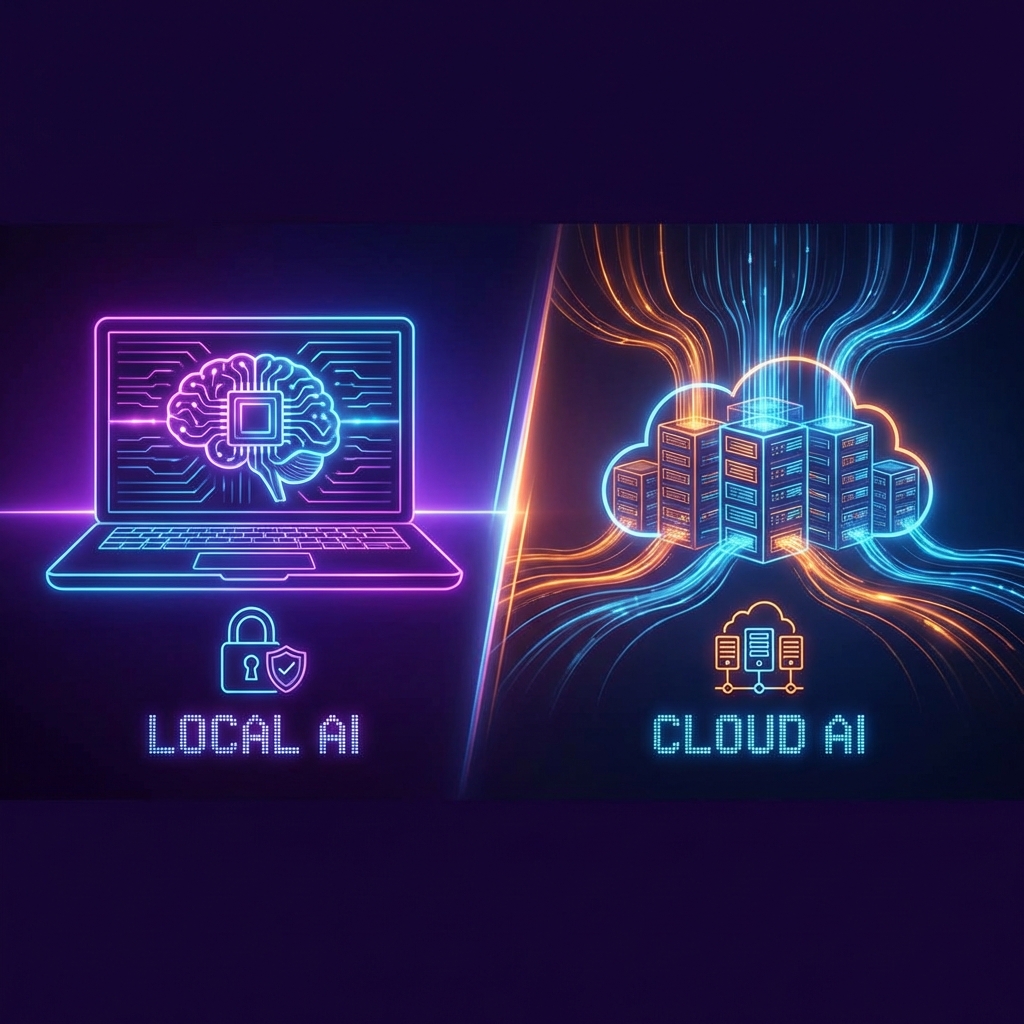

Local AI vs Cloud AI: The Privacy vs Power Trade-off

Last month I decided to try running AI entirely locally on my laptop. No internet connection needed, no data leaving my machine. Here's what I learned—and why I now use both.

What "Local AI" Actually Means

Instead of sending your prompts to OpenAI's or Anthropic's servers, you download an AI model and run it on your own computer. Tools like Ollama make this surprisingly easy: once it's installed, a single command (ollama run llama3.2) downloads a model and drops you into a chat.

The models you can run locally (like Llama, Phi, or Mistral) are much smaller than the flagship models behind ChatGPT or Claude, but they're genuinely capable for many tasks.
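Beyond the interactive chat, Ollama also serves a local HTTP API, which is handy if you want to script things. Here's a minimal sketch using only Python's standard library. It assumes Ollama is installed and running on its default port (11434) and that the llama3.2 model has already been downloaded. The function names build_request and ask_local are my own, not part of Ollama.

```python
import json
import urllib.request

# Ollama's default local endpoint (assumes the Ollama server is running on this machine).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3.2") -> urllib.request.Request:
    """Build a non-streaming generate request for a locally running Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local(prompt: str, model: str = "llama3.2") -> str:
    """Send the prompt to localhost only, so the text never leaves the machine."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With stream=False, Ollama returns the whole completion in the "response" field.
    return body["response"]
```

Calling ask_local("Draft a polite reply declining this meeting") returns the model's text, and the request goes no further than localhost.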

The Privacy Case

Let me be real about why this matters. When you use ChatGPT or Claude:

- Your prompts go to their servers

- They might train on your data (depends on your plan and settings)

- You're trusting their security practices

- If they get breached, your data could be exposed

For personal chats about dinner plans, who cares? But if I'm working on confidential business documents, client data, or anything I'd hate to see become public, local AI is genuinely valuable.

What I Actually Tested

I ran Llama 3.2 and Phi-3 locally for a month, using them for:

- Email drafting

- Code explanations

- Document summaries

- Brainstorming

My honest assessment: for roughly 70% of my daily AI use, local models are good enough. For the other 30% (complex coding, creative writing, deep analysis), cloud AI still wins.

The Downsides Nobody Mentions

Speed: My MacBook takes about 10 to 20 seconds for responses that are near-instant on ChatGPT. In a rapid back-and-forth, that wait adds up.

Quality: The gap is real. Local models make more mistakes, miss nuance, and struggle with complex instructions. Not terrible, but noticeable.

Setup hassle: It's gotten easier, but there's still more friction than "open browser, type question."

Battery: Running AI locally drains your laptop battery fast. Plan to be plugged in.

My Current Approach

I now split my usage:

Local (Ollama with Llama):

- Anything with confidential information

- Work I'm doing offline

- Simple tasks like email help or quick questions

Cloud (ChatGPT/Claude):

- Complex coding sessions

- Creative writing

- Tasks where I need the best possible quality

- When I'm at my desk and speed matters
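The split above is simple enough to write down as a rule of thumb. Here's a minimal Python sketch of that routing decision. The Task fields and the choose_backend function are hypothetical, invented for illustration, and the order of the checks (confidentiality and offline work first) reflects my own priorities, not the behavior of any tool.

```python
from dataclasses import dataclass
from enum import Enum


class Backend(Enum):
    LOCAL = "local"  # e.g. Ollama running Llama on the laptop
    CLOUD = "cloud"  # e.g. ChatGPT or Claude


@dataclass
class Task:
    # Hypothetical task attributes mirroring the local/cloud split above.
    confidential: bool = False
    offline: bool = False
    complex_work: bool = False  # complex coding, creative writing, work needing top quality
    speed_matters: bool = False


def choose_backend(task: Task) -> Backend:
    """Route a task to local or cloud AI using the rules of thumb above."""
    # Privacy and connectivity are hard constraints, so they are checked first.
    if task.confidential or task.offline:
        return Backend.LOCAL
    # Otherwise, demanding or time-sensitive work goes to the stronger cloud models.
    if task.complex_work or task.speed_matters:
        return Backend.CLOUD
    # Simple tasks (email help, quick questions) default to local.
    return Backend.LOCAL
```

Note the judgment call baked in: choose_backend(Task(confidential=True, complex_work=True)) returns Backend.LOCAL, so a confidential coding session stays on the laptop even though the cloud would do it better.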

Should You Try Local AI?

If any of these apply to you:

- You work with genuinely sensitive data

- You're curious and willing to tinker

- You want AI that works on planes

- You're privacy-conscious

...then yes, give Ollama a try. Setup takes maybe 30 minutes, and you'll learn something useful.

If you just want the best AI experience and don't have serious privacy concerns, stick with cloud. It's better in most ways that matter day-to-day.

The Bigger Picture

I suspect the future is hybrid. Sensitive stuff stays local, power-hungry tasks go to the cloud. The tools are already moving this way: even Apple's AI features try to run on-device when possible.

Understanding both options now means you'll be ready for wherever this goes.